Tuesday, 23 December 2008

hmong in french guiana

Desperately poor and utterly, deeply fascinating, the history of that area got well under my skin, and I've maintained an interest in it ever since, including its various hill tribes, among them the Hmong.

The Hmong basically backed the wrong side. Some stayed, a lot left. While I knew that many had relocated to Nebraska, not always happily, what I didn't know was that some were happily farming vegetables in French Guiana, and generally making a go of it ...

Monday, 22 December 2008

metaphors, not interfaces

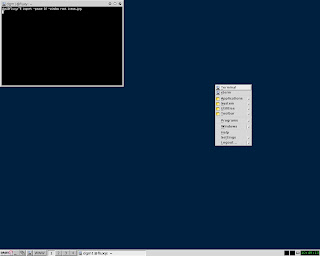

icewm and fluxbox

A few weeks ago I blogged about how interfaces had to look real - and I'd still stand by that, but I've been thinking some more about this.

When we talk about interfaces for computer operating systems we really mean the desktop/window manager, and what we're really talking about is a set of expectations, which are to a large extent governed by XP.

Everyone has used XP, and everyone knows how to find their way around XP via the start menu. Other well known interfaces work the same way: KDE has a start menu, gnome has pull downs, as does OS X.

Interestingly, though apocryphally, it's apparently easier to move first year information sciences students across to kubuntu than to straight ubuntu, for the simple reason that it's more XP like in appearance, and XP is what they overwhelmingly use in school.

So we could say we have two common metaphors, the XP metaphor and the gnome/OS X metaphor. It makes one wonder how quite a different minimalist desktop, eg icewm, would fare in usability testing - given that it breaks the set of expectations, the metaphor, that makes something intuitive.

Same goes for browsers. Same goes for word processors. People could move to Open office more easily than to Word 2007 purely because it was closer to their expectations as to how menus were structured. Even mobile phones are prone to the same problem - most people know how to find their way round a nokia - give them a samsung and they're stumped.

So metaphors are like memes, the collection of ideas that people have about how things are going to work and how things are going to be structured. Step away from the metaphor and people perceive it as difficult, needing extra training etc, and hence the cost goes up, etc etc.

And this need for metaphor conformance means that everything ends up being the same - great for transition, poor for innovation, and it makes radical change difficult, which goes back to my remarks about the linpus interface - they could have used the native xfce and probably got away with it. They could have customised it to make it look like XP. They didn't; they made something simple and self evident. What they didn't do was use a lightweight manager such as fluxbox or icewm.

Obviously I'm not privy to why, but I'm guessing metaphors had something to do with it ...

Saturday, 20 December 2008

austlang ...

Good to see a project that you were in at the beginning of finally ship.

Wednesday, 3 December 2008

Tom Graham

Tom was a decent man, a confirmed cyclist and most of all, an old school librarian, but one who understood fully the implications of digital media. I didn't know him particularly well, nor did our paths cross that often, but when they did he was always fair and never took himself too seriously. Famous, or perhaps infamous, for once puzzledly asking 'what is telnet?' in the middle of a university computing committee meeting, Tom championed the use of digital resources in libraries and helped push the adoption of digital media.

And, underneath it all, Tom was still a country boy. I'll always remember the summer morning when I turned up for work to find an escaped herd of cows blocking University Road and Tom in his suit enthusiastically shooing them off the road and onto some open grass beside the library.

Memories like that make working at a university utterly unique ...

Friday, 28 November 2008

social networking tools get the news out

The same sort of thing was seen in the Californian bushfires, and I dare say the Thai blogs are full of news from inside Bangkok International airport.

The point is that the technology makes it simple to get the information out and enable the viral spread of news. It's not journalism, it's information, and while there are risks in rumours, misreports and too many reports overwhelming people, it provides a channel to get the information out. No more can bad things happen behind closed doors - as we see in the rise of social media in China, or the Burmese government's hamfisted attempts to block uploads of mobile phone footage. If the death of privacy also means the end of secrecy, that might be a deal worth living with.

Wednesday, 26 November 2008

It's the interface stupid

Building the imacs was useful - it demonstrated just how little cpu and memory you needed to run a desktop to do the standard things - a bit of text processing, web mail, google docs, and in my case a usenet news reader for the two posts a week I'm interested in. I'm now convinced that an eight year old machine can make a perfectly useful web centric device.

Likewise, having built various vms, I've come to the conclusion that gnome and xfce are usable window managers. Fluxbox and IceWM, both of which work and use even less in the way of resources than xfce, are usable, but they're a bit rough round the edges, and the desktop manager needs to be slick. Apple did this with aqua and convinced a whole lot of people that unix on the desktop was a viable option precisely by not mentioning unix and hiding it behind a nice interface. Microsoft to an extent tried to sell Vista on the idea that it had a slicker look than the XP interface, and of course we all know that the version of gnome that ships with ubuntu has more than a passing resemblance to XP.

Interfaces need to be intuitive, and while they can be different from what people are used to they can't be too different - and everyone knows xp.

And that takes us to the Acer Aspire. A fine machine - so fine I might even buy one - but the interface, based on xfce, is dumbed down - so dumbed down it doesn't look like a 'real' operating system, and consequently makes the xp version of the aspire look like a 'proper computer'.

Run xfce natively and it looks like a 'real' machine. The functionality is exactly the same, the applications work the same, but the interface makes it look second rate.

Now linux has a scary reputation out there involving beards, sandals and unfortunate trousers. As I said, Apple got away with things by not mentioning that OS X is really BSD, so I'm guessing Acer decided that using Linpus was simple and wouldn't have a support load - the fact that if you want to do something sensible, like install skype, you're back playing with the command line shows that it's dumbed down too far - a simple version of xfce (or fluxbox - after all if you can develop linpus you have the resources to sleek up fluxbox) would have been better.

It makes it look like a real computer - and that's what people want: they want to look different, not like a freak.

Tuesday, 25 November 2008

OpenSolaris 2008.11 RC2

Way back in May, I built an open solaris install using 2008.05 on virtualbox.

And after some faffing around it worked pretty well, even if it was just another unix desktop. Could have been Suse, could have been Fedora. Six months later, and while it's still only rc2 and not the final distribution version, I decided to have a go at building 2008.11. This is not a like for like comparison: while I'm again building it on VirtualBox I'm now using version 1.6, and there's always the possibility that Sun have improved its ability to run OpenSolaris distros.

The live cd image booted cleanly, and the installer that puts the system on disk was clean, self evident and reasonably quick, seemed slicker than the previous version, and ran with no errors. Ignoring the false start caused by my forgetting to unmount the cd image, the boot process was fairly slick and professional looking, with a single red line in a rotating slider to let you know things were still going on (that and the hdd light). Like many modern distros there was little or no information about what was happening during the process (personally I like the reassurance of the old startup messages).

The boot and login process was utterly uneventful, with the vm connecting straight to the network (unlike the slightly annoying debian and suse extra mouseclick). The default software install was utterly standard and gnome like, and again open office was not installed by default - probably because it won't fit onto the distribution disk.

The package installer is noticeably slicker, with more software available, and is intuitive to use.

Generally, it looks and feels faster than the previous 2008.05 distro and somehow looks more polished and professional. Definitely worth trying and building on a 'real' pc. Like Suse it probably wants more resources than ubuntu and really legacy hardware might struggle, but on recent hardware it should be fine.

Monday, 24 November 2008

google earth as a survey tool for roman archaeology?

And then I remembered a report from earlier this year of archaeologists using Google Earth to search for the remains of sites of archaeological interest in Afghanistan.

And then I had a thought. Is google earth good enough to search for missing Roman period remains, not to mention celtic hillforts and the like? If so one could imagine people doing detailed scans of individual 1km blocks to look for likely sites.

After all they tried the same thing looking for Steve Fossett. It might just work as a strategy.

Perhaps I should try it ...
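As a rough sketch of what "individual 1km blocks" could mean in practice, the snippet below cuts a latitude/longitude bounding box into cells roughly one kilometre on a side, which could then be handed out to volunteers one at a time. The function name, the flat approximation of 111.32 km per degree of latitude, and the sample coordinates are all my own assumptions, not anything Google Earth provides.

```python
import math

KM_PER_DEG_LAT = 111.32  # approximate; good enough for carving up a survey area


def one_km_blocks(south, west, north, east, size_km=1.0):
    """Yield (south, west, north, east) cells of roughly size_km per side."""
    dlat = size_km / KM_PER_DEG_LAT
    # A degree of longitude shrinks with latitude, so scale by cos(latitude).
    mid_lat = math.radians((south + north) / 2)
    dlon = size_km / (KM_PER_DEG_LAT * math.cos(mid_lat))
    rows = math.ceil((north - south) / dlat)
    cols = math.ceil((east - west) / dlon)
    for r in range(rows):
        for c in range(cols):
            s = south + r * dlat
            w = west + c * dlon
            # Clip the last row and column to the edge of the survey area.
            yield (s, w, min(s + dlat, north), min(w + dlon, east))


if __name__ == "__main__":
    # Hypothetical survey area; each cell is one block to inspect by eye.
    for block in one_km_blocks(54.0, -2.0, 54.05, -1.9):
        print("%.4f, %.4f -> %.4f, %.4f" % block)
```

The cells at the northern and eastern edges come out smaller than 1km because they are clipped to the survey area rather than allowed to spill over it.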

Sunday, 23 November 2008

PPC imac redux

So got it home, turned it on, and found it whined - although it did get better as it settled down, so possibly it's just that the bearings in the fan dried out or something more serious - after all when you pay $10 for something it's no great loss if you end up cannibalizing it for spares.

So, put the ubuntu cd in, held down C as it booted to force it to read the cd. Not a bit of it - it had been configured to boot off the network by default. OK reboot it holding the option key down to get it to scan for a bootable system either on the disk or a cd.

And this is where it got fun. The firmware was password protected, and of course I didn't know the password. However Google was my friend here and came up with a couple of sensible links as to how to reset the password. Now in the instructions it says first add or remove memory from the machine. The machine only had a single 128MB DIMM. At this point I had a senior moment and removed the DIMM. Not surprisingly the machine failed self test as it didn't have any memory in it at all.

So the problem became where to get additional memory. Fortunately it used pc100 DIMMs just like the $83 linux machine, so I 'borrowed' some memory from it, brought up the new imac, reset the PRAM, returned the borrowed memory and then rebooted into open firmware, forced a scan, and hey presto we were installing linux.

Well, we were trying to install linux. Since it was (a) a newer machine and (b) I'm a glutton for punishment, I thought I'd try a newer version. Silly boy! Had as much success as I did when I tried to upgrade the original 1998 imac - ie none. So it's back to the trusty 6.06 distribution. And it works. Runs fine. Possibly I need to buy another one to get more memory, or maybe I should break it up for parts, but at the moment I have 2 ppc imacs running linux. Which is perhaps a little excessive, but hey, I had some fun and learned some things getting it running ...

Tuesday, 11 November 2008

Getting Data off an old computer

NASA and the 1973 Corolla fan belt

- It used a very nonstandard 3 inch disk format

- Its own word processor was not particularly compatible with anything else though third party export software did appear in time

Monday, 10 November 2008

Stanza from Lexcycle - a first look

Friday, 7 November 2008

mobile printing and the page count problem

Medieval social networking ...

At first it may seem a little odd, but bear with it, it's quite fascinating. They took advantage, much as Ladurie did with Montaillou, of extant medieval records to work out the network of social obligation. In this case they used 250 years' worth of land tenancies (around 5000 documents) to work out the network of social obligation between lords and tenants, and its changes, between approximately 1260 and 1500, a period which encompassed both the black death and the hundred years' war. They also made use of supporting documents like wills and marriage contracts.

Sunday, 2 November 2008

drawing together some book digitisation strands ...

- The international trade in hard to find second hand books will die

- Someone somewhere will start a mail order print on demand service to handle people without access to espresso book machines

- e-readers will become more common for scholarly material - interesting or difficult texts will become solely print on demand or e-texts

- Mass market books will always be with us, economies of scale and distribution cancel the warehousing costs

a slice of migrant life

Monday, 27 October 2008

Genghis Khan and the optiportal

Explains Lin : "If you have a large burial, that's going to have an impact on the landscape. To find Khan's tomb, we'll be using remote sensing techniques and satellite imagery to take digital pictures of the ground in the surrounding region, which we'll be able to display on Calit2's 287-million pixel HIPerSpace display wall. ...

Which sounds like an interesting use of the technology for large scale visualisation work.

Wednesday, 22 October 2008

mobile printing (again!) ...

- Users can upload and print the files from anywhere

- Users can upload but defer printing of files until they are ready to print

- Users only need to login to the print management application once

- Users need to create pdf files

- Printing is not seamless - users need to login to print management application

- Users need to install and configure remote printer

- Users need to login to separate print management application to release print job

- Users do not have the option of immediate print

- Printing is seamless

Monday, 20 October 2008

Shibboleth and the shared cloud

technology meets the electoral process

Saturday, 18 October 2008

Putting Twitter to work

So far so geek.

Now I have a real world application for it - providing live system status updates. One thing we lack at work is an effective way of getting information that there is a system problem out to people. Basically we email notifications to people.

However, if we can apply sufficiently rigorous filters to the output from various monitoring systems such as nagios, we can effectively provide an input feed into a micro blogging service. This then produces an RSS output feed which people can then refactor in various ways elsewhere on campus.

And of course we can inject human generated alerts into the service and use our own tiny url service to pass urls of more detailed technical posts on a separate blog when we have a real problem.

Also, we could glue the feeds together, much as I have with this blog, in a webpage where people can check and see if there is a problem, or indeed what's happening in the background - good when you have researchers in different timezones wanting to know what's going on, and it gives a fairly live dynamic environment.
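To make the filtering step a bit more concrete, here is a minimal sketch of what "sufficiently rigorous filters" might look like before anything reaches the micro blogging service. It assumes alerts have already been parsed out of the monitoring system into simple records. The `Alert` fields, the choice to publish only CRITICAL states, and the example url are illustrative assumptions, not nagios's own data model or API.

```python
from dataclasses import dataclass

MAX_LEN = 140  # message length limit of the micro blogging service (assumed)


@dataclass(frozen=True)
class Alert:
    host: str
    service: str
    state: str   # e.g. "OK", "WARNING", "CRITICAL"
    detail: str


def filter_alerts(alerts, publish_states=("CRITICAL",)):
    """Keep only states worth broadcasting and drop exact repeats."""
    seen = set()
    kept = []
    for alert in alerts:
        key = (alert.host, alert.service, alert.state)
        if alert.state in publish_states and key not in seen:
            seen.add(key)
            kept.append(alert)
    return kept


def to_status(alert, url=None):
    """Format one alert as a short status line, leaving room for a short url."""
    text = f"{alert.state}: {alert.service} on {alert.host} - {alert.detail}"
    suffix = f" {url}" if url else ""
    room = MAX_LEN - len(suffix)
    if len(text) > room:
        text = text[: room - 3].rstrip() + "..."
    return text + suffix


if __name__ == "__main__":
    raw = [
        Alert("mail1", "smtp", "CRITICAL", "connection refused"),
        Alert("mail1", "smtp", "CRITICAL", "connection refused"),  # repeat
        Alert("web1", "http", "WARNING", "slow response"),
    ]
    for alert in filter_alerts(raw):
        print(to_status(alert, url="http://example.org/x"))
```

Human generated alerts could go through the same `to_status` step, with the short url pointing at the longer technical post.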

All pretty good for a couple of hours creative buggering about to get my head round Twitter ...

Wednesday, 15 October 2008

Optiputer

Tuesday, 14 October 2008

the twitter feed

As an experiment I think it's quite successful, if only for me to (a) keep a list of interesting links in my sent mail and (b) share interesting things I've been reading with anyone interested enough to follow this blog.

Of course it doesn't have to be twitter and twittermail; various other microblogging services provide the tools to create a similar effect - here it's twitter and twittermail purely because I found the correct off the shelf tools in their software ecology.

Personally I think this anonymous sharing model is more useful than the social networking 'share with friends' closed group model - it allows people who may be interested in a topic and who follow your blog also to follow the links you find interesting. Social network providers of course want to use the links to add value for people who are part of the network, to keep them hooked, or to sell them targeted advertising, or whatever.

In fact it's probably almost worthwhile also providing a separate rss feed for the interesting links, as it's not beyond the bounds of probability that someone finds the collection of links more useful than the original blog.

bloglines.com

Likewise I've stuck with Bloglines as a blog aggregator in preference to google reader. In fact I've been using bloglines since 2004 which must mean something. And I've been happy with it - performance is rock solid, or rather was. Since the last upgrade it's claimed that feeds did not exist (including well known ones like guardian.co.uk) and if you re-add a feed it will work for a bit then stop. Response is poor compared to before the upgrade (being ever so anal I tend to read the feeds at the same time in the morning - so I think I can claim this even if it's anecdotal).

So one more bit of me has been assimilated by the borg - I've moved over to google reader. Not such an elegant interface, but more reliable, and given that my major reason for reading rss feeds is industry news and updates, it's worth trading elegance for performance ...

Monday, 13 October 2008

not installing plan 9 ...

Tuesday, 7 October 2008

Nine months sans Microsoft ...

Now, I am not an anti microsoft zealot. Yes, I think Microsoft's business practices were not the best, but then all through the nineties and well into the first few years of the present decade there was no real alternative as a mass market desktop operating system - linux wasn't (isn't ?) there, and Apple seemed to lose the plot, and it took a long time to come back with OS X. The same in the application space. The competitors were as good if not better, and they took a long time a dying. Microsoft got where it was by either having products to which there was no serious alternative, or by convincing people that there was no serious alternative to Office and the rest. That was then and this is now, we work with the present reality.

Judi isn't an anti anything zealot as far as computers go. Computers are tools to her. Email, web, grading student reports, writing course notes and assignments and that's it. Providing she can send emails and buy stuff online, research stuff and get into the school email system she's fine.

Our decision to go microsoftless was purely pragmatic. I can do most of what I need to do with abiword, open office, google apps, firefox, zoho and pan, and I can do this on a couple of fairly low powered machines - an old ppc imac and a pc I put together for $83. Judi likes to play with digital photography, so we bought an imac purely because the screen was nicer. I thought we might have to buy a second hand windows pc as well but that hasn't turned out to be the case. Firefox, safari, google apps and neo office have let her get her work done, even coping with the docx problem.

The only problems we've had are with bathroom design catalogues (canned autorun powerpoint on a cd) and an ikea kitchen design tool. Things we could work around easily and which turned out to be totally inessential. Other than that it's fine. Emails get written, appointments made, books bought, assignments graded, documents formatted.

So we've proved you can live without windows. We've also proved we could live with windows and not linux or OS X - no operating system has a set of killer apps for middle of the road usage.

And more and more we're using online apps - at what point does zonbu or an easy neuf become a true alternative? (and when do we get vendor lock in and all the rest in the online space?)

Sunday, 5 October 2008

what is digital preservation for?

Digital Archiving as preservation

Here one essentially wants to keep the data for ever, across hardware upgrades and format changes. Essentially what one is doing is taking a human cultural artifact, such as a medieval manuscript or an aboriginal dreamtime story as recorded, making a digital version of it and keeping the file available for ever.

This has three purposes:

1) Increased access - these artifacts are delicate and cannot be accessed by everyone who wishes to. Nor can everyone who wants access afford it. While the preservation technology is expensive, access is cheap - this is being written on a computer that cost me $83 to put together. This also has the important substrand of digital cultural repatriation - it enables access to the conserved materials by the originators and cultural owners. Thus, to take the case of a project I worked on, Australian Aborigines were too impoverished to conserve photographs and audio recordings of traditional stories and music; digital preservation allows copies of the material to be returned to them without any worries about its long term preservation.

2) Long term preservation. The long term conservation of digital data is a 'just add dollars' problem. The long term preservation of audio recordings and photographs is not. And paper burns. Once digitised we can have many copies in many locations - think CLOCKSS for an example design - and we have access for as long as we have electricity and the Internet.

3) Enabling new forms of scholarly research. This is really simply an emergent property of #1. Projects such as the Stavanger Middle English Grammar project are dependent on increased access to the original texts. Without such ready access it would have been logistically impossible to carry out such a study - too many manuscripts in too many different places.

Digital archiving as publication

This seems an odd way of looking at it but bear with me. Scholarly output is born digital these days. It could be as an intrinsically digital medium such as a research group's blog, or digitally created items such as the TeX file of a research paper or indeed a book.

This covers e-journals and e-press as well as conventional journals, which increasingly also have a digital archive.

These technologies have the twin functions of increasing access - no longer does one have to go to a library that holds the journal one wants, and likewise one has massively reduced the costs of publication.

Of course there's a catch here. Once one had printed the books and put them in a warehouse the only costs were of storage. These books were then distributed and put on shelves in libraries. The long term preservation cost was that of a fire alarm, an efficient cat to keep the depredations of rats and mice in check, and a can of termite spray. OK, I exaggerate, but the costs of long term digital preservation are probably higher, in part due to the costs of employing staff to look after the machines and software doing the preserving and making sure that things keep running.

The other advantage is searchability. One creates a data set and then runs a free text search engine over it. From the data come citation rankings, as loved by university administrators to demonstrate that they are housing productive academics (e-portfolios and the rest), and also the creation of easy literature searches - no more toiling in the library or talking to geeky librarians.

Digital preservation as a record

Outside of academia this is seen as the prime purpose of digital preservation. It is a way, by capturing and archiving emails and documents, of creating a record of business, something that government and business have always done - think the medieval rent rolls of York, the account ledgers of Florentine bankers, and the transcripts of the trial of Charles Stuart in 1649. While today they may constitute a valuable historical resource, at the time they served as a means of record to demonstrate that payment had been made and that due procedure had been followed.

In business, digital preservation and archiving is exactly that: capturing the details of transactions to show that due process has been followed, and because it's searchable, it's possible to do a longitudinal study of a dispute. In the old days it would have been hundreds of APS4's searching through boxes of memo copies; today it's a pile of free text searches across a set of binary objects.

Digital archiving as teaching

When lecturers lecture, they create a lot of learning aids around the lecture itself, such as handouts and reading lists. The lecture is often a digital event in itself, with a PowerPoint presentation of salient points or key images, plus the lecture itself.

Put together this creates a compound digital learning object and something that is accessible as a study aid some time after the event.

While one may not want to keep the object for ever one may wish to preserve either components for re-use or even the whole compound object as the course is only offered in alternate years.

However these learning objects need to be preserved for reuse, and in these litigious times, to also prove that lecturers did indeed say that about Henry II and consequently students should not be marked down for repeating it in an exam.

Conclusion

So digital preservation and archiving has a purpose, four in fact. The purposes differ but there are many congruences between them.

Fundamentally the gains boil down to increased accessibility and searchability.

The commercial need for such archiving and search should help drive down the cost of preservation for academic and pedagogic purposes. Likewise academic research relevant for digitisation, eg handwriting recognition and improved search algorithms, should benefit business and justify the costs of academic digital preservation.

Thursday, 2 October 2008

Viking mice, the black death and other plagues

Likewise, prior to the black death we know the population had undergone a fairly rapid expansion, and hence could support the large rodent population in towns required as a reservoir for the plague bacterium. It has been hypothesised that plague was not a significant problem until it ended up infecting the large urban populations of Alexandria and Constantinople as part of the Plague of Justinian in 541-542. Large population, seaport, lots of rats.

There's also recently been a suggestion that the same sort of thing has happened with HIV in Africa - it had probably always been there but did not take hold until significant urbanisation in the twentieth century, with accompanying population densities and greater opportunity for random sexual encounters.

So what does this mean for seventh century Britain? It's often argued that there was a plague event that preferentially devastated the sub-Roman communities in the west of the island rather than the areas under Saxon control, providing an opportunity for further expansion westward by the Saxons. Does this mean that the plague was in the population and the outbreak was a result of higher population densities in the west capable of supporting a plague reservoir, or does it simply mean that continued contact with the Byzantine east exposed the sub-Roman population to greater risk of infection, as they had ports and the remnants of urban communities surrounding the ports to form an initial entry point for the plague?

If the former, it suggests that the sub-Roman successor states were capable of holding their own despite the loss of a lot of the prime agricultural territory, but to make a decision we need to know more about trade patterns: for example, were the sub-Roman populations also trading grain with northern France?

Tuesday, 30 September 2008

Putting some medieval digitisation strands together

Saturday, 20 September 2008

Adam of Usk and the Espresso book machine ...

As I've said elsewhere many times, print on demand is the ideal solution for rare and obscure books and out of print titles. Basically all you need is a computer, a digitised version of the book, and a printer with an auto binder. And the technology to do this is cheap, when a basic laser printer costs a couple of hundred dollars, and a rather more meaty one under a thousand.

And there's a lot of material out there. Project Gutenberg has been happily digitising old out of copyright texts, and now that many of the texts have markup they can be processed easily and reformatted for republication.

And we see that publishers have begun to use this to exploit their backlists as in FaberFind. And certainly when I helped put together a similar project for a scholarly publisher, that seemed to be the way to go. No warehousing, no startup costs for printing, just churn them out when required, and only digitise and work on the original text when requested. That way while the first copy was expensive in terms of time and effort, any subsequent copy was free other than the cost of paper and toner.

Not e-texts?

Well, once you've a digitised marked up text it's relatively easy to convert it into any of the formats commonly used by bookreaders. Texts are hard to read and annotate on the screen, and I would assume the same is true on a Kindle or Sony Book reader - I'm hypothesising here, I've never seen either of these devices, as they're not available in Australia, but clearly they are supposed to be the ipods of the book world. Anyway, while they may work for fiction or any other book read from beginning to end, I suspect that an e-text hasn't quite got the utility of a book. And you probably can't read in the bath :-). An e-text reader that allows you to export the text to an sd-card and then take it to a print and bind machine for backup or reference purposes might hit the sweet spot for scholarly work. That way you could have a paper reference copy and a portable version to carry around.

And Adam of Usk ?

Adam of Usk was a late fourteenth/early fifteenth century cleric, lawyer, historian and chronicler. If he'd been alive today he'd have been a blogger. He wrote a long, rambling, gossipy chronicle - part diary, part history - that covers a whole range of key events, from the visit of the Emperor Manuel II of Byzantium to Henry IV of England to drum up support for Byzantium's war against the Turks, through Adam's time serving on the legal commission to come up with justifications for the forced deposition of Richard II, to the events of the Welsh Wars of Owain Glyndwr and the Peasants' Revolt.

A book that you'd think there'd be a Penguin classic edition of. Nope, you're wrong. There's an 1876 translation (Adam wrote in Latin) and a newer 1997 translation published at the cost of a couple of hundred bucks a copy - purely because this sort of book is probably only really of interest to scholars and the costs of short run conventional publishing are horrendous and self defeating.

Why there's no readily available edition is just one of those mysteries. It's gossipy and rambling, but then the Alexiad is not exactly a model of conciseness and tight structure. But basically there's no readily available edition to dip in and out of. In short it's the ideal example of a text tailor made for print on demand publishing. The thirty bucks that a print on demand copy would cost is a damn sight cheaper than even a tatty second hand copy of the 1876 edition (cheapest I found was GBP45 - say a hundred bucks).

Friday, 12 September 2008

Peek email reader ...

what transcription mistakes in manuscripts might tell us

Java Toasters

Thursday, 11 September 2008

interesting twitter behaviour ...

Tuesday, 9 September 2008

Twitter ...

And then I had a thought. One thing I do do is skim blogs and online newsfeeds for things that interest me. And I've often thought about doing a daily post on today's interesting things. Instead I'll use twitter and either tinyurl or our in-house short form url service to post links to things I find interesting ...

ambient intimacy

Wednesday, 3 September 2008

SMC Skype WiFi phone

Tuesday, 2 September 2008

mobile printing redux ...

- User logs in to system

- System presents the user with a web page listing the user's files and an option to do an http upload of a file (analogy is the geocities website manager)

- Beside each non-pdf file we have two options - convert to pdf and print. All printing is done by converting to pdf and then running pdftops, with conversion being done with either OpenOffice command line mode or abiword command line mode as appropriate and then print/export to produce the pdf. The analogy is with Zoho's or Google Docs' print and import options

- Pdf files have the options view or print, which means that users can check the layout before printing

- Printing is done by passing the print job through pdftops and then queuing it to a holding queue with lprng.
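The conversion and queuing steps above can be sketched as a function that works out which commands to run for an uploaded file. This is only a sketch under stated assumptions: the split of file types between abiword and the office suite, the queue name `hold`, and the `soffice --headless --convert-to` invocation (taken from current LibreOffice, and not necessarily what OpenOffice's command line mode offered at the time) are all mine. It builds the command lists without running anything.

```python
from pathlib import PurePosixPath

# Assumed split of file types; the design only says "as appropriate".
ABIWORD_TYPES = {".doc", ".rtf", ".txt", ".abw"}
OFFICE_TYPES = {".odt", ".ods", ".odp", ".xls", ".ppt", ".docx"}


def to_pdf_command(src):
    """Return the command that converts src to pdf, or None if it already is one."""
    src = PurePosixPath(src)
    ext = src.suffix.lower()
    if ext == ".pdf":
        return None
    if ext in ABIWORD_TYPES:
        # abiword writes the converted file alongside the input
        return ["abiword", "--to=pdf", str(src)]
    if ext in OFFICE_TYPES:
        return ["soffice", "--headless", "--convert-to", "pdf",
                "--outdir", str(src.parent), str(src)]
    raise ValueError(f"no converter configured for {ext!r}")


def print_commands(src, queue="hold"):
    """List the commands, in order, needed to get src onto the holding queue."""
    src = PurePosixPath(src)
    pdf = src.with_suffix(".pdf")
    ps = src.with_suffix(".ps")
    steps = []
    convert = to_pdf_command(src)
    if convert is not None:
        steps.append(convert)
    steps.append(["pdftops", str(pdf), str(ps)])      # pdf -> postscript
    steps.append(["lpr", "-P", queue, str(ps)])       # queue to the held printer
    return steps


if __name__ == "__main__":
    for step in print_commands("essay.doc"):
        print(" ".join(step))
```

Each list could be passed to `subprocess.run` in turn. Keeping the planning separate from the execution makes it easy to show the user what will happen before anything is sent to the printer.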

Sunday, 31 August 2008

Byzantine links with post roman britain ...

Faberfinds

Some university and academic publishers have gone to a print on demand model, where the text is prepared for printing and copies are only printed as one offs as required, which in these days of cheap high volume laser printing is a really compelling way to go - no warehousing or inventory management costs.

Now comes news of Faber Finds - a mainstream UK publisher giving print on demand to its back list - basically you get a bound printed copy of a book from the back list on request. Of course this costs money, but it does provide an interesting change in the way of providing access to out of print texts, and incidentally to scanning and digitally archiving these books.