I have never been convinced by bibliometrics, viewing it as something between black magic and a shell game. The fact that Scopus is owned by Elsevier didn’t exactly help either.

It’s been my view that all these attempts to measure impact are flawed and incorporate unconscious bias against researchers who work at less prestigious institutions and perhaps do not publish mainly in English.

The reasons for this are complex, but I suspect it is in part because reviewers and editorial boards tend to be drawn from a small number of anglophone institutions, and tend to favour researchers working in institutions known to them.

And twenty years ago, they might have had a point. Computing resources were expensive and access to libraries and journals was difficult outside institutions that took a full range of journals. (When I was a researcher forty years or more ago, I had an Inter Library Loan allowance to cover gaps in my home institution’s journal collection, but it did mean the process of reviewing the literature on a topic could be tedious as one waited for the loan article to arrive and then inevitably had to request another two.)

Nowadays it’s easier. Most laptops are powerful enough to run quite complex data analyses, R is free, open source software with a cornucopia of tools and techniques, online access to journals is relatively easy, and if there’s a problem getting hold of something, you can always hope the lead author is on ResearchGate or Academia.edu and amenable to providing an electronic offprint.

But searching the literature is still much the same. One starts with a search engine and a query.

Usually it’s Google, but it could be Bing or Kagi, all of which make use of large language models, with Bing being the most reliant on a large language model.

Let’s say I was doing some fecal analysis at an archaeological site - old latrines and their deposits provide a host of information about what people ate, even if the contents are not the nicest to work with, and I had discovered a lot of raspberry seeds.

Raspberries do grow wild in Europe, so I might wish to know if they were cultivated, or gathered wild.

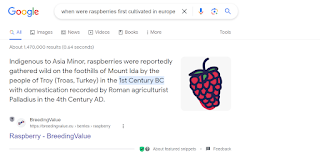

So a reasonable first query would be ‘when were raspberries first cultivated in Europe’

I’d expect then to search for sources for the results, perhaps the results of other fecal analyses, but as part of the search process, the first results would be crucial. I ran the same query on the AI-enabled Bing, Google, Kagi, and, as a control, on the old-school Yandex search engine.

The results are, shall we say, inconsistent.

Bing, even though it quotes a less reliable source, is possibly the most accurate. There’s a lot of evidence for fruit cultivation starting in monastery gardens across Europe from the 12th century onwards.

Kagi is helpful, Google less so, and Yandex simply goes for Wikipedia, which is as good a solution as any.

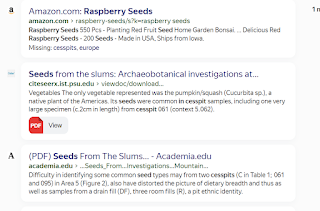

None of them mentioned cesspits, so I reran the exercise specifically mentioning cesspits in the hope of getting more focused results.

When asked about raspberry seeds being found in cesspits, most of them found the same content. Only Kagi found detailed research, although Bing made a creditable attempt.

And what does this mean for research citation?

I’m not sure, but the differences suggest that the various large language models have biases, and probably it’s best at the moment to run literature searches on multiple search engines ...